Devious marketing of M2

- Thread starter MacsAre1

Apple's chip designers aren't some kind of wizards who are magically much more competent at designing chips than everyone else in the industry.

Nope, they are not wizards. It's just that they have spent over a decade designing chips with a focus on ultra-low power consumption instead of the ultra-high clock speeds the x86 folks went for. So Apple has a very healthy head start here. What also helps is that they can throw much more money at the problem, which allows them to use huge caches and other large internal structures. AArch64 definitely helps, but not because it is "RISC" (whatever you think that means), but because it's a modern ISA designed from the ground up to be efficient to decode and to bundle individual operations in a way that a state-of-the-art out-of-order (OoO) CPU can utilise efficiently. And of course, it also helps that Apple limits their SIMD performance and cuts down the cache bandwidth compared to x86 CPUs, which saves Apple a lot of power.

They achieve orders of magnitude better performance per watt because they only build chips with arm64 CPU cores and metal GPU cores. And they can do that because the software team rewrote the entire foundation of macOS for version 11 Big Sur.

Now you lost me. What does that have to do with "metal GPU cores" (again, whatever you think that means)? And of course they didn't "rewrite the entire foundation of macOS". They've had that stuff running on ARM since the beginning: it's called iOS.

And Photoshop and Blender have also been recompiled to run natively on these new chips. New software written for new instruction sets allows for a more efficient implementation of CPU and GPU cores. Apple didn't waste money on Blender's Metal support; they unlocked the power of Apple Silicon and thus enabled the 16" MacBook Pro with M2 Max to outperform the Mac Studio with M1 Ultra even while running on battery power! It's ****ing amazing and you keep denying it.

Ok? The only things I am arguing against are your explanations. As I wrote before, I understand that you are a fan of Apple Silicon (as am I, by the way; it's the most exciting thing to have happened to computing in a long while), but just because you are a fan doesn't mean that your explanations are correct or appropriate.

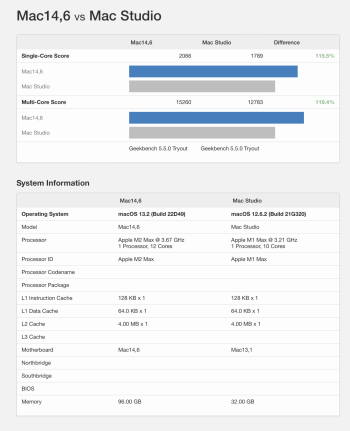

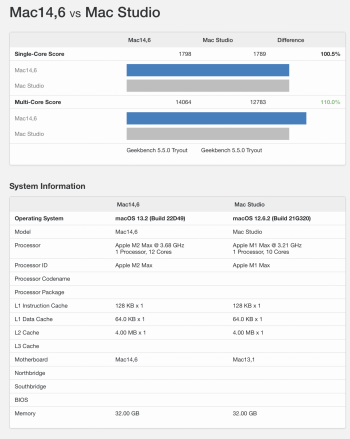

Hi, I agree 100%, same weird numbers with the M1 Max. Here are my numbers for my computer vs. two M2 Max configurations from Geekbench. It's a marginal improvement. Let's see the 3 nm Apple silicon in the future.

Some sneaky marketing on Apple's part... This article points out that they're comparing the M2 Mac Mini to an older computer (2 generations back) and claiming it's 5x faster than this "bestselling PC." That's pretty devious. How does it compare to a current-gen Intel CPU of a similar price? Apple's ARM chips sound great, but if they fudge the numbers here, where else are they fudging the numbers? I'm not sure if I can believe them.

The Mac Mini page also compares everything with the i7 Mac Mini as the baseline... which came out in 2018 with a year-old Intel CPU (8th gen)... So compared with a chip 5 generations old, I'd expect anything newer to be faster. In their benchmarks they include an i7 iMac, which is from 2020 and uses a 3-generation-old Intel chip from 2019. I'd expect any chip in the same class to be faster today than one from 3-5 years ago. Notably, on some of the functions listed the M1 Mini was slower than the i7 iMac. The M2 beats them all... So congratulations, the M2 is finally faster than a Mac from 2020. But how does it compare with its true competition, a current PC running on the latest Intel or AMD chipsets? You can tout nm all you want, but if the performance isn't there, what's the point? Power usage is a big selling point for the laptops, but few people care about power usage on a desktop.

Apple, cut the crap and give us some real numbers to compare your performance with the competition.

Apple's performance comparisons to Windows PCs continue to be hilarious and ridiculous

The fruit company just can't help itself, can it?

www.windowscentral.com

Unless literally no game studios adopt it. Then it doesn't matter how superior the code and product is. No one is using it.

Because Metal is far superior. It follows the same RISC approach for GPU instructions as ARM did for CPU cores. The result is much better performance per watt. That's what you want in battery-powered devices like smartphones, tablets and laptops.

Here listen again:

Unless literally no game studios adopt it. Then it doesn’t matter how superior the code and product is. No one is using it.

Any Unity game out there (and there are a bunch) is using it. A few custom engines are using it. Sure, the selection of games available for the Mac is embarrassingly small, but there are plenty of both indie and big-name games running on Apple Silicon without issues.

Unless literally no game studios adopt it. Then it doesn’t matter how superior the code and product is. No one is using it.

You said it all, bro.

Is there any information on Apple's GPU instruction set? Is it very different from that of PC GPU manufacturers?

It's easier to implement on silicon, because the instruction set is reduced to the core of what you really need to describe graphical calculations.

Is there any information on Apple's GPU instruction set? Is it very different from that of PC GPU manufacturers?

Metal Overview - Apple Developer

Metal powers hardware-accelerated graphics on Apple platforms by providing a low-overhead API, rich shading language, tight integration between graphics and compute, and an unparalleled suite of GPU profiling and debugging tools.

Metal 3 is only the latest revision, not the last. The important part is that Apple controls both Metal and the GPU chip design on which it runs. This allows them to change one or the other or both to make them work together more efficiently.

That website only has documentation for the GPU API, not for the GPU ISA.

Metal 3 is only the latest revision, not the last.

You can see an example of GPU ISA on the AMD website.

AMD GPU architecture programming documentation - AMD GPUOpen

A repository of AMD Instruction Set Architecture (ISA) and Micro Engine Scheduler (MES) firmware documentation

You keep repeating the same nonsense without ever thinking about it. The first iPhone (2007) started out with a 1,400 mAh battery. Now the 16" MacBook Pro comes with a 99.6 watt-hour battery, a heat pipe and two fans. Apple did not focus on low power consumption, but on efficiency (performance per watt), while only ever increasing power consumption and performance. ARM chips were always low-power (because they are Advanced RISC Machines Ltd.) and Apple changed that to high-power and high-performance.

Nope, they are not wizards. It's just that they have spent over a decade designing chips with a focus on ultra-low power consumption instead of the ultra-high clock speeds the x86 folks went for. So Apple has a very healthy head start here.

Because that's the abstraction layer programmers are concerned with. How the API calls are translated into instructions and executed on the silicon is nobody's business but Apple's. They're going to change that whenever they see an opportunity for improvement and efficiency gains.

That website only has documentation for the GPU API, not for the GPU ISA.

You keep repeating the same nonsense without ever thinking about it. The first iPhone (2007) started out with a 1,400 mAh battery. Now the 16" MacBook Pro comes with a 99.6 watt-hour battery, a heat pipe and two fans. Apple did not focus on low power consumption, but on efficiency (performance per watt), while only ever increasing power consumption and performance.

And yet the CPU per-core power consumption is essentially the same. You are confusing individual design targets with the full system design targets. So far, Apple designs their CPU cores with a 5W thermal envelope in mind, and their goal is maximising the performance without blowing this envelope. After all, the same core has to work on mobile and desktop. Same goes for the GPU. It's just that desktop configurations pack many more of those cores together, which is why the total power is significantly higher.
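To make the per-core vs. whole-system distinction concrete, here is a minimal Python sketch with made-up numbers. The per-core budgets (5 W per performance core, taken from the post, and 0.5 W per efficiency core) and the core counts are hypothetical assumptions, not measured Apple figures. It only shows that if the core design stays fixed, total CPU power grows with the number of cores packed onto the chip.

```python
# All figures below are hypothetical, chosen only to show the arithmetic.
PER_P_CORE_W = 5.0   # assumed peak budget per performance core
PER_E_CORE_W = 0.5   # assumed peak budget per efficiency core

def cpu_peak_watts(p_cores: int, e_cores: int) -> float:
    """Rough upper bound on CPU power if every core hits its budget at once."""
    return p_cores * PER_P_CORE_W + e_cores * PER_E_CORE_W

# Hypothetical configurations built from the same core design.
configs = {
    "phone-like (2P + 4E)": (2, 4),
    "laptop-like (8P + 4E)": (8, 4),
    "desktop-like (16P + 4E)": (16, 4),
}
for name, (p, e) in configs.items():
    print(f"{name}: ~{cpu_peak_watts(p, e):.0f} W")
```

Same core, same per-core envelope; only the count changes, so the system total goes from roughly 12 W to roughly 82 W under these assumed numbers.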

I do hope that Apple will start increasing the power consumption a bit to differentiate better between the phone and desktop lines. M2 GPU for example seems to be designed more with desktop in mind (M1 was still mobile-first). But we will see.

ARM chips were always low-power (because they are Advanced RISC Machines Ltd.) and Apple changed that to high-power and high-performance.

ARM chips were always low-power because those are the applications ARM has focused on. Intel had no interest in the low-power market, exclusively designing high-power CPUs. Funnily enough this decision came back to bite them, as they run into issues with power-scaling their designs and lack the technology to make them more efficient. The irony of all this is that current Intel designs are still based on Pentium Pro/Pentium M family of cores which were known for their power efficiency. At the end of the day, designing for power efficiency trumps just going for it. You make smarter solutions from the start when you know that you are restricted.

But again, none of this has anything to do with the fact that ARM has the word "RISC" in their name. ARM ISA went through multiple iterations, and current 64-bit ARM is a huge departure from the old ARM1/2/3 instruction sets. I would even go as far as to claim that AArch64 is the best ISA currently on the planet. It has it all: it's smartly designed and it makes the CPU's job easier. But again, that has nothing to do with the traditional understanding of "RISC". I mean, I would be happy to explain this to you in more detail if you tell us what you think makes RISC special.

Is there any information on Apple's GPU instruction set? Is it very different from that of PC GPU manufacturers?

We do know quite a bit thanks to Asahi folks and especially Dougall Johnson's excellent work on reverse-engineering Apple's GPU ISA. You can find the gory details here: https://dougallj.github.io/applegpu/docs.html

In short, Apple GPUs use variable-length instructions (from 2 bytes to 12 bytes per instruction) and are fairly standard SIMD/SIMT-style parallel processors. The execution model is basically the same as what AMD uses (32-wide SIMD). Apple's approach to control flow (SIMT) is kind of cool. That's about it. What's fairly notable is that Apple's GPU seems to be much simpler compared to Nvidia's or AMD's. There doesn't seem to be a special function unit or a dedicated scalar unit, just plain old-school SIMD with individual lane threading. Even the fixed-function hardware is very limited: it basically deals with rasterisation, primitive assembly and shader dispatch. Things like interpolation are just done in compute shaders, which makes the hardware much simpler and easier to work with.
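As a rough illustration of what "SIMD with individual lane threading" means for control flow, here is a generic Python sketch of how SIMT hardware commonly handles a divergent if/else with a per-lane execution mask. It is a toy model under that assumption and does not reproduce Apple's actual control-flow mechanism described in the linked documentation; the function name `simt_if_else` is hypothetical.

```python
from typing import Callable, List, Tuple

WIDTH = 32  # lanes per SIMD group (the 32-wide figure mentioned above)

def simt_if_else(values: List[int],
                 cond: Callable[[int], bool],
                 then_fn: Callable[[int], int],
                 else_fn: Callable[[int], int]) -> Tuple[List[int], int]:
    """Toy SIMT model of `if (cond) then_fn else else_fn` for one SIMD group.

    All lanes share one instruction stream, so a divergent branch runs as up
    to two passes, each with a per-lane execution mask that decides which
    lanes commit results. Returns (results, number of passes issued).
    """
    assert len(values) == WIDTH
    mask = [cond(v) for v in values]   # per-lane predicate
    out = list(values)
    passes = 0
    if any(mask):                      # 'then' pass: only masked-on lanes write
        passes += 1
        for lane in range(WIDTH):
            if mask[lane]:
                out[lane] = then_fn(values[lane])
    if not all(mask):                  # 'else' pass: inverted mask
        passes += 1
        for lane in range(WIDTH):
            if not mask[lane]:
                out[lane] = else_fn(values[lane])
    return out, passes

# Divergent: even lanes take 'then', odd lanes take 'else', so both passes run.
out, passes = simt_if_else(list(range(WIDTH)), lambda v: v % 2 == 0,
                           lambda v: v * 10, lambda v: -v)
print(out[:4], passes)  # [0, -1, 20, -3] 2
```

When all 32 lanes agree on the condition, only one pass is issued; a divergent branch costs both passes, which is why branchy shader code tends to be slower on SIMT GPUs in general.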

As far as I remember, in layman's terms, RISC in the past often meant being incredibly fast at floating-point operations while being average (if not outright sucky) at integer.

Floating-point performance is great for stuff like physics or 3D calculations, but low integer performance meant poor(er) performance for a lot of stuff, like running an actual OS.

But as far as I understand, current "RISC" architectures, at least the very performant ones, aren't strictly RISC anymore, and "CISC" architectures aren't strictly CISC either; it's more that both have elements of each now.

"RISC" and "CISC" were simply two labels for how CPUs used to be designed forty years ago. In a "CISC" CPU the instruction was used to select microcode (a tiny routine stored on the CPU) and it was the microcode that was actually executed. The idea was to give programmers more tools to work with and minimise the program size. In a "CISC" CPU each instruction could take different numbers of steps to execute. In a "RISC" CPU, there was no microcode and the program instructions would directly map to the CPU internal hardware logic. This made program execution more predictable, eliminated the microcode (which occupied a large part of the CPU) and could make CPUs potentially faster. Both of these design styles offered different tradeoffs in times when memory was extremely expensive and the number of transistors designers could use was limited.

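A toy Python model of the distinction described above, assuming a made-up machine: the `ADDMEM` opcode and its four-step microcode routine are hypothetical. The "CISC" program is one instruction that expands into stored micro-operations; the "RISC" program spells out the same four hardware operations as separate instructions. Both do the same work in the same number of steps, but the first takes less program memory, which is the tradeoff that mattered when memory was expensive.

```python
from typing import Dict, List, Tuple

Regs = Dict[str, int]
Mem = Dict[int, int]

def run_uop(uop: Tuple, regs: Regs, mem: Mem) -> None:
    """Execute one primitive hardware operation (one 'step')."""
    op = uop[0]
    if op == "load":        # ("load", rd, addr)
        regs[uop[1]] = mem[uop[2]]
    elif op == "store":     # ("store", rs, addr)
        mem[uop[2]] = regs[uop[1]]
    elif op == "add":       # ("add", rd, ra, rb)
        regs[uop[1]] = regs[uop[2]] + regs[uop[3]]
    else:
        raise ValueError(f"unknown micro-op {op!r}")

# "CISC" style: the opcode selects a stored microcode routine.
# ADDMEM (hypothetical) adds the word at src into the word at dst.
MICROCODE = {
    "ADDMEM": lambda dst, src: [
        ("load", "t0", dst),
        ("load", "t1", src),
        ("add", "t0", "t0", "t1"),
        ("store", "t0", dst),
    ],
}

def run_cisc(program: List[Tuple], regs: Regs, mem: Mem) -> int:
    steps = 0
    for name, *args in program:
        for uop in MICROCODE[name](*args):   # instruction -> microcode -> steps
            run_uop(uop, regs, mem)
            steps += 1
    return steps

def run_risc(program: List[Tuple], regs: Regs, mem: Mem) -> int:
    for uop in program:                      # each instruction is one hardware op
        run_uop(uop, regs, mem)
    return len(program)

mem_a = {100: 2, 104: 3}
cisc_steps = run_cisc([("ADDMEM", 100, 104)], {}, mem_a)    # 1 instruction

mem_b = {100: 2, 104: 3}
risc_steps = run_risc([("load", "r0", 100), ("load", "r1", 104),
                       ("add", "r0", "r0", "r1"), ("store", "r0", 100)],
                      {}, mem_b)                           # 4 instructions
print(mem_a[100], cisc_steps, mem_b[100], risc_steps)  # 5 4 5 4
```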
Nowadays these labels are probably much less useful, as CPU design has evolved and become much more complex. Contemporary concerns are very different from what was important in the '70s and '80s. Some ARM CPUs use microcode, while most x86 instructions do not. Modern ARM contains very complex, specialised instructions that combine multiple smaller operations. For example, a single instruction can increment a register by a constant, load two values from consecutive memory addresses given by that register, and store them in two other registers. And of course the hardware needs multiple steps to run that instruction. How is this in the spirit of the original "RISC"?
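The example in the paragraph above appears to describe AArch64's load-pair instruction with writeback, e.g. `ldp x0, x1, [x2, #16]!`. Below is a simplified Python model of its semantics with 64-bit registers, assuming memory is a dictionary of 8-byte words keyed by address. It ignores alignment, the encodable immediate range, and the architecture's restrictions on overlapping registers; it only shows the separate effects bundled into one instruction.

```python
from typing import Dict

Regs = Dict[str, int]
Mem = Dict[int, int]   # address -> 64-bit value (alignment not modelled)

def ldp_pre_index(regs: Regs, mem: Mem, rt1: str, rt2: str, rn: str, imm: int) -> None:
    """Simplified model of AArch64 `LDP Xt1, Xt2, [Xn, #imm]!` (pre-index)."""
    addr = regs[rn] + imm        # 1. compute address from base + offset
    regs[rt1] = mem[addr]        # 2. first 64-bit load
    regs[rt2] = mem[addr + 8]    # 3. second load from the adjacent 8 bytes
    regs[rn] = addr              # 4. write the incremented address back to the base

def ldp_post_index(regs: Regs, mem: Mem, rt1: str, rt2: str, rn: str, imm: int) -> None:
    """Simplified model of AArch64 `LDP Xt1, Xt2, [Xn], #imm` (post-index)."""
    addr = regs[rn]              # loads use the old base...
    regs[rt1] = mem[addr]
    regs[rt2] = mem[addr + 8]
    regs[rn] = addr + imm        # ...then the base is incremented

regs = {"x2": 0x1000}
mem = {0x1010: 7, 0x1018: 9}
ldp_pre_index(regs, mem, "x0", "x1", "x2", 16)   # ldp x0, x1, [x2, #16]!
print(regs["x0"], regs["x1"], hex(regs["x2"]))    # 7 9 0x1010
```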

I believe that instead of relying on imprecise terms like "RISC" and "CISC" to characterise architectures, one needs to study the design details and discuss what the instruction sets have in common and how they differ. Blanket statements like "RISC is faster" or "RISC is more power-efficient" are not helpful and only obfuscate the complex reality of CPU design.

More nonsense! First of all, the iPhone never needed a fan, so it fits well into any thermal envelope. And with high-performance and high-efficiency cores there are at least two power consumption levels. But more importantly, the process node, battery capacity and core count change with every new iPhone generation. So it's absolute nonsense to target a specific per-core power consumption. The whole system must be in a good power-vs-performance balance to deliver so-called "all-day battery life". How many hours that means remains vague. The cores rarely run at 100% utilization and consume far less than the maximum anyway. Apple's design goal is a consumer experience in which overnight charging is enough. They don't give a **** about any artificial 5W limit.

And yet the CPU per-core power consumption is essentially the same. You are confusing individual design targets with the full system design targets. So far, Apple designs their CPU cores with a 5W thermal envelope in mind, and their goal is maximizing the performance without blowing this envelope. After all, the same core has to work on mobile and desktop.

The nonsense continues. RISC started as an experiment. Nobody knew how big the efficiency gains of a reduced instruction set might be. They discovered by accident that their new chips even ran at incredibly low power. At the beginning this was nothing but a curiosity. Initially ARM wanted to build more powerful desktop CPUs. They didn't target the low-power market, because such a market didn't exist in volume back then. The Newton, iPods and cellphones only created demand for millions of low-power chips after ARM made it possible to build powerful handheld devices.

ARM chips were always low-power because those are the applications ARM has focused on. Intel had no interest in the low-power market, exclusively designing high-power CPUs.

Yeah, because now it has developed into a 64-bit, multicore architecture capable of competing in the desktop market. But the basis underneath is still RISC. You can take a reliable Formula 1 car and tune it to be faster and still reliable. But you can't start with a fast but unreliable motor and add reliability later. It's not enough to invest infinite time and money to make x86 as efficient as arm64. You need to cut out the rarely used instructions, which only bloat the design. You need to become incompatible with your entire software ecosystem and add back x86 support via an extra Rosetta 2 translation layer. It's the only way.

But again, none of this has anything to do with the fact that ARM has the word "RISC" in their name. ARM ISA went through multiple iterations, and current 64-bit ARM is a huge departure from the old ARM1/2/3 instruction sets.

Let me give you another example. The new 24" iMac is only 11.5 mm thick and weighs just 4.5 kg. Yes, Apple prioritized thin and light for years, but that doesn't mean that other companies, without this head start, could make a USB-A port fit at the back of an iMac. The USB-A plug is too deep; it would literally poke out at the front. They could barely fit in a headphone jack from the side. And on an iPad you can't fit a headphone jack at all.

If you want your laptop to be light to carry around, you have to make it thin. Because volume equals weight. The thicker laptop will always be the heavier one. So you absolutely need to cut native support for the vast ecosystem of USB-A devices or else you put a limit on progress. Mac mini and Mac Pro can keep USB-A ports, but none of Apple's other devices. You can still support the old industry standard via dongles, but in no other way.

And now back to Metal and why Apple simply can't support triple-A games, which rely on old legacy APIs designed to run on Nvidia cards the size and price of a house. It would absolutely destroy the MacBook Pro and everything that's good about it. Game studios had better target Apple Silicon, or they've lost me and billions of others as their customers.

Wait, what?

And now back to Metal and why Apple simply can't support triple-A games, which rely on old legacy APIs designed to run on Nvidia cards the size and price of a house. It would absolutely destroy the MacBook Pro and everything that's good about it. Game studios had better target Apple Silicon, or they've lost me and billions of others as their customers.

No it wouldn't!

The Switch, which has a processor over 5 years old, can have AAA games. For example, Doom Eternal was published on it.

ANY of the Mx processors are much more powerful than a Switch is, meaning any of them can have AAA games too.

If Apple made an effort, even the iPhone could have AAA games published to it, because its processor is very powerful as well.

At this point, it's not a matter of processing power anymore. It's about Apple not supporting eGPUs or the Vulkan API, and killing 32-bit compatibility with old games.

Now, Apple CAN kill 32-bit compatibility. But it must offer something in return to attract game studios.

More nonsense! First of all, the iPhone never needed a fan, so it fits well into any thermal envelope. And with high-performance and high-efficiency cores there are at least two power consumption levels. But more importantly, the process node, battery capacity and core count change with every new iPhone generation. So it's absolute nonsense to target a specific per-core power consumption. The whole system must be in a good power-vs-performance balance to deliver so-called "all-day battery life". How many hours that means remains vague. The cores rarely run at 100% utilization and consume far less than the maximum anyway. Apple's design goal is a consumer experience in which overnight charging is enough. They don't give a **** about any artificial 5W limit.

You know, you remind me of some philosophers of old. Experimentation, fact checking: only losers need that! Everything can be discovered using only the power of thought!

But seriously, go look at the tests. It should be very clear that peak performance at ~5 watts is a core design metric of Apple CPU team.

The nonsense continues. RISC started as an experiment. Nobody knew how big the efficiency gains of a reduced instruction set might be. They discovered by accident that their new chips even ran at incredibly low power. At the beginning this was nothing but a curiosity. Initially ARM wanted to build more powerful desktop CPUs. They didn't target the low-power market, because such a market didn't exist in volume back then.

Of course there was no "low power market". You are talking about the time when microprocessors consumed 2-3 watts.

But the basis underneath is still RISC.

What does this even mean? The original "RISC" meant "one instruction is one hardware CPU operation". We are long past that. Why do you still call it RISC? The main differences between ARM and x86 nowadays are fixed instruction width vs. variable instruction width, and a load/store architecture instead of a reg/mem architecture (which is used as load/store in real programs anyway). When it comes to specialised instructions or addressing modes, ARM is way more complex.
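The practical cost of variable instruction width shows up when the decoder has to find where instructions start. Here is a minimal Python sketch, assuming a hypothetical toy encoding in which the low two bits of the first byte give the instruction length; real x86 length decoding, with prefixes and ModRM bytes, is far more involved. With fixed 4-byte instructions every start offset is known up front, while with variable width each start depends on decoding the previous instruction.

```python
from typing import Callable, List

def boundaries_fixed(code: bytes, width: int = 4) -> List[int]:
    """Fixed-width ISA: instruction n starts at n * width, so every start
    offset is known before decoding and a wide decoder can work in parallel."""
    return list(range(0, len(code), width))

def boundaries_variable(code: bytes, length_of: Callable[[bytes, int], int]) -> List[int]:
    """Variable-width ISA: each start depends on the length of the previous
    instruction, so locating boundaries is a sequential chain."""
    starts: List[int] = []
    pc = 0
    while pc < len(code):
        starts.append(pc)
        pc += length_of(code, pc)   # must decode this one to find the next
    return starts

def toy_length(code: bytes, pc: int) -> int:
    """Hypothetical encoding: low two bits of the first byte hold (length - 1)."""
    return (code[pc] & 0b11) + 1

print(boundaries_fixed(bytes(12)))                                     # [0, 4, 8]
print(boundaries_variable(bytes([0x00, 0x01, 0xAA, 0x03, 0, 0, 0]), toy_length))  # [0, 1, 3]
```

Real x86 cores work around this sequential chain with techniques such as predecoding and micro-op caches, which cost extra hardware and power; this sketch does not model those.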

which rely on old legacy APIs

Vulkan was released in 2016. DX12 was released in 2015. "Old"? Ok.

designed to run on Nvidia cards the size and price of a house.

Funny thing to say considering that Apple GPUs are 4-5x more expensive for the same performance level.

But it must offer something in return to attract game studios.

They do. Reliable high performance even on entry-level devices, an easy-to-use unified software platform, excellent developer tools. Every Apple Silicon device is essentially a gaming console. The main problem is the user base. If game studios believed that developing for the Mac was profitable, they would do it. Which basically means that Mac users need to buy more games.

They do. Reliable high performance even on entry-level devices, an easy-to-use unified software platform, excellent developer tools.

They don't, or else gaming studios would be with them. Whatever they offer is not attractive enough for gaming studios to join the ride.

Just offering good hardware is not enough. Consoles are riddled with examples of good hardware held back by anything from very complicated development tools (e.g., Saturn) to poor marketing (Dreamcast) or the platform not being considered commercially viable (Ouya).

Apple just isolated themselves by killing off Vulkan / eGPU support, blocking Nvidia / AMD drivers, and so on. Period. Developers are not going to spend millions JUST to port to Metal if they feel it's not worth the benefit or if Apple will break software compatibility soon (which they do quite often with their APIs).

They don't, or else gaming studios would be with them. Whatever they offer is not attractive enough for gaming studios to join the ride.

Just offering good hardware is not enough. Consoles are riddled with examples of good hardware held back by anything from very complicated development tools (e.g., Saturn) to poor marketing (Dreamcast) or the platform not being considered commercially viable (Ouya).

Apple just isolated themselves by killing off Vulkan / eGPU support, blocking Nvidia / AMD drivers, and so on. Period. Developers are not going to spend millions JUST to port to Metal if they feel it's not worth the benefit or if Apple will break software compatibility soon (which they do quite often with their APIs).

You say that, but it's not what is really happening. Even before Metal, when Apple used industry standard OpenGL, there were virtually no "big" gaming titles on the Mac. Main reason: profitability and extreme difficulty of supporting the platform. There was a time when Mac gaming thrived, but that was very long ago, before the modern age of PC gaming.

Two years ago, when M1 was introduced, there were hopes that this would lead to a new gaming renaissance for the Mac. This hasn't really happened, at least not yet. But the simple fact is that gaming on Apple Silicon is now in a better state than Mac gaming was in during the entire history of Intel Macs. It's the first time ever that we have big, traditionally PC-only titles (like AAA shooters) running natively on the Mac, and it's the first time that we can play games with decent framerates and quality. And this improvement in gaming quality slowly started when Metal was introduced on the Mac, simply because the devs finally got access to a predictable GPU API rather than the mess that was OpenGL. Sure, Bethesda, Ubisoft and Rockstar won't care. But it's not like they cared before. And it's not like they would care if Apple supported Vulkan either. If someone is interested in developing for Macs, they will do it. If they are not interested, Vulkan won't help.

P.S. Another fun fact is that standard APIs create an illusion of a standard platform. But the platform is not standard. AMD, Nvidia, Intel all support different extensions and feature sets and all require slightly different approaches to achieve best possible performance. What works well on one device might not work well on another one. That's why Nvidia drivers inject custom-optimised shaders for popular games. This is the actual hard step of optimisation. Metal on the other hand truly offers a unified platform. If your software architecture is sound, porting a custom engine to Metal is a much smaller deal than you'd think. Of course, most games use readily available gaming engines which already support Metal.

You say that, but it's not what is really happening. Even before Metal, when Apple used industry standard OpenGL, there were virtually no "big" gaming titles on the Mac. Main reason: profitability and extreme difficulty of supporting the platform. There was a time when Mac gaming thrived, but that was very long ago, before the modern age of PC gaming.

Two years ago, when M1 was introduced, there were hopes that this would lead to a new gaming renaissance for the Mac. This hasn't really happened, at least not yet. But the simple fact is that gaming on Apple Silicon is now in a better state than Mac gaming was in during the entire history of Intel Macs. It's the first time ever that we have big, traditionally PC-only titles (like AAA shooters) running natively on the Mac, and it's the first time that we can play games with decent framerates and quality. And this improvement in gaming quality slowly started when Metal was introduced on the Mac, simply because the devs finally got access to a predictable GPU API rather than the mess that was OpenGL. Sure, Bethesda, Ubisoft and Rockstar won't care. But it's not like they cared before. And it's not like they would care if Apple supported Vulkan either. If someone is interested in developing for Macs, they will do it. If they are not interested, Vulkan won't help.

At least before, you could run Windows with Boot Camp and plug in an eGPU. This WOULD give access to all the games on Windows.

The platform is in a worse state now because you only have virtualization as an option, you can't upgrade your GPU and the previous native 32-bit ports won't work. Sure, there's Crossover to run Windows versions of those games, but it doesn't work all the time, and once Rosetta 2 is gone (probably sooner rather than later), then Crossover will be in a tight spot.

It's the first time ever that we have big traditionally PC-only titles (like AAA shooters) running natively on the Mac, and it's the first time that we can play games with decent framerates and quality.

That doesn't really mean much when your only native options are Resident Evil Village, League of Legends and Starcraft 2. Anything else either relies on virtualization or Crossover.

Oh, I also hear gamers complain that Apple's monitors are slow for games that require low delay, such as shooters. That's a bit harder to fix.

At least before, you could run Windows with Boot Camp and plug in an eGPU. This WOULD give access to all the games on Windows.

Are we talking about Mac gaming or Windows gaming? Sure, you could use Intel Macs as a Windows PC, but is that really how you want "Mac gaming" to look like?

That doesn't really mean much when your only native options are Resident Evil Village, League of Legends and Starcraft 2. Anything else either relies on virtualization or Crossover.

That's a bit of an exaggeration, don't you think?

Are we talking about Mac gaming or Windows gaming? Sure, you could use Intel Macs as a Windows PC, but is that really how you want "Mac gaming" to look like?

It's not ideal to use Windows on a Mac to game, but what's important is that the user will only need ONE computer. That clearly saves money and is more practical (no need to carry two computers), so that's very relevant.

That's a bit of an exaggeration, don't you think?

No. How many recent native games do you think were released?

https://doesitarm.com/games lists only 5.9% of games as Apple Silicon native (no Rosetta, virtualization or Crossover required).

I counted here, and it only gives me TWELVE games. That's only TWELVE games TWO years after the release of Apple Silicon. And that's not even considering that some of the games being counted aren't AAA games (e.g., Stardew Valley, Among Us).

It's REALLY bad.

That list is misleading (BG3 is native, but is listed as needing Rosetta 2).

It's not ideal to use Windows on a Mac to game, but what's important is that the user will only need ONE computer. That clearly saves money and is more practical (no need to carry two computers), so that's very relevant.

No. How many recent native games do you think were released?

https://doesitarm.com/games lists only 5.9% of games as Apple Silicon native (no Rosetta, virtualization or Crossover required).

I counted here, and it only gives me TWELVE games. That's only TWELVE games TWO years after the release of Apple Silicon. And that's not even considering that some of the games being counted aren't AAA games (e.g., Stardew Valley, Among Us).

It's REALLY bad.
