I have two 970 EVOs, 2 TB each. Both hold exactly the same data files (music sessions, pictures, installers and so on), and both are at 1.2 TB used. I don't save a whole lot, but I do make small saves quite often, and I haven't noticed any throttling. They do tend to run slightly hot, so I bought heatsinks for them; those work decently and keep the temperatures in check.

Sure, good point. Currently I use a 1 TB 970 Pro as my data drive and would like to replace it with a cheaper 2 TB NVMe, then put the 970 Pro into an NVMe enclosure. But instead of a Titan Ridge I went the USB-C card route, which of course will limit the 970's speed even more. That's OK for me as long as I get constant read and write speeds of around 900 MB/s; as an external SSD, that's better than the Samsung T7.

As a 970 Pro replacement, I know the EVO Plus is a potential candidate, but from what I've read it can get quite hot and throttles after around 100 GB. Are there alternatives in that price range that don't slow down on writes?


MP All Models PCIe SSDs - NVMe & AHCI

- Thread starter MisterAndrew


Thanks for chiming in. I also transfer audio files, mostly from an external drive to an internal one: sometimes 250 GB, up to 1 TB.

A heatsink is of course a good idea. But I doubt the throttling after about 100 GB on the EVO Plus is heat-related; isn't it due to a full cache?

I rechecked the 2 TB NVMe drives listed in this thread, and from what I see the EVO Plus has the best price.

The other SSDs are more expensive.

The EVO Plus would be perfect if it didn't throttle after 100 GB; I don't really care about its rather high temperatures compared to other NVMe drives. Am I missing something here?
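A slowdown after a fixed amount of data, rather than after a fixed time under load, is consistent with the SLC write cache filling up. A minimal Python sketch of what that means for the 250 GB to 1 TB copies mentioned above, assuming a fixed 100 GB cache and hypothetical speeds of 3000 MB/s while writing into the cache and 1000 MB/s after it fills. These are placeholders, not measured EVO Plus figures; real drives also vary the cache size with free space.

```python
def write_time_s(total_gb, cache_gb, fast_mb_s, slow_mb_s):
    """Seconds to write total_gb when the first cache_gb go at fast_mb_s
    and everything after that goes at slow_mb_s (decimal GB and MB/s)."""
    in_cache = min(total_gb, cache_gb)
    after_cache = max(0, total_gb - cache_gb)
    return in_cache * 1000 / fast_mb_s + after_cache * 1000 / slow_mb_s


def average_mb_s(total_gb, cache_gb, fast_mb_s, slow_mb_s):
    """Effective throughput over the whole copy."""
    return total_gb * 1000 / write_time_s(total_gb, cache_gb, fast_mb_s, slow_mb_s)


# Hypothetical drive: 100 GB cache, 3000 MB/s in cache, 1000 MB/s after it fills.
for size_gb in (50, 250, 1000):
    t = write_time_s(size_gb, 100, 3000, 1000)
    avg = average_mb_s(size_gb, 100, 3000, 1000)
    print(f"{size_gb:>5} GB: {t / 60:5.1f} min, average {avg:6.0f} MB/s")
```

With these placeholder numbers a 1 TB copy averages roughly 1070 MB/s, so for long copies the post-cache speed matters far more than the headline figure.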


@krakman, do you still have the SN770 working correctly with your late-2013 Mac Pro?

Update 26 Nov 2022: four months of use without any further problems. Rock solid!

I installed Win 10 via Boot Camp and that works well too, although I only use it for the WD NVMe drive utility program.

Update: I spent the last 4 days editing a couple of wedding videos in FCPX, and the Trashcan has been rock solid.

No KPs (kernel panics), no hangs, no beachball.

I assume the initial problems I experienced were caused by either a faulty NVMe adapter, dirty/poor contacts, or something that was fixed in Monterey 12.5.


WD SN770 2TB NVME now working fine in Trashcan MP

Last month, when I installed the SSD, it would KP when transferring large files. Although intermittent, the KPs happened regularly during the day and made editing video impractical.

I wiped the SSD and then reinstalled it with another NVMe adapter (same brand, different unit). This time I cleaned all the contacts thoroughly before installing.

The drive has now been working fine for several days. I have installed Win 10 via Boot Camp (previously this would KP during installation) and copied over a 136 GB FCPX video project (previously this caused a KP).

The only other difference since the first installation is the release of Monterey 12.5 (could that have fixed something?).

So far it's been 3 days without a single KP!


Thanks to everyone in this wonderful thread, especially tsialex, who provides so much knowledge and sound advice.

All of this upgrading stuff is hard work for me, with lots to get my head around. So it seems the 970 EVO Plus is the way to go, but all the controller options confuse me.

I've got a 5,1 with an RX 580 and am planning one more upgrade, to Monterey (currently on Mojave, without a boot screen, and very reliable).

I just want the most reliable controller, preferably with room for two or more drives, rather than pure speed (even 1500 MB/s would be a lot faster than my SATA SSD).

The 7101A controller is REALLY expensive, and I'm confused about when to use heatsinks.

Also, which slot do I put the card in? I'm not sure how it will all fit alongside my USB PCIe card and the GPU.


Just to add a compatibility report (with a simple PCIe to single-NVMe adapter):

Patriot VPN100 is working perfectly and booting on both my MP3,1 (with firmware injections for NVMe boot and APFS) and my MP5,1 (firmware 144.0.0.0), on patched Mojave (3,1), Ventura via OCLP (3,1 and 5,1) and vanilla Mojave (5,1).

In both the 3,1 and 5,1 machines it reaches 1450 MB/s read/write.


I'm trying to get a better understanding of IOPS from PCIe 4.0 NVMe SSDs in a PCIe 3.0 slot. I'm currently running an SSD7104 card in my 7,1 in an x16 slot, so it's getting full bandwidth.

My boot NVMe (an SK Hynix P41 Platinum) sits in one of the card's PCIe 3.0 x4 slots. I understand that its sustained read/write speed is essentially cut in half, which doesn't matter to me for this use case.

But what about its random read/write speed? I'm under the impression that it isn't hampered in this scenario, but can someone with better knowledge confirm? I believe this SSD is rated at 1.4M IOPS read and 1.3M IOPS write. Does that come close to saturating a PCIe 3.0 x4 link?
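A back-of-the-envelope check of that question in Python. It assumes the 1.4M IOPS rating is for 4 KiB random reads (the usual convention for these specs) and uses the nominal PCIe 3.0 rate of 8 GT/s per lane with 128b/130b encoding, ignoring packet overhead, so the real ceiling is somewhat lower. The point is only the arithmetic: IOPS multiplied by transfer size is bandwidth.

```python
GEN3_GT_S = 8.0               # PCIe 3.0 signalling rate per lane (GT/s)
GEN3_ENCODING = 128 / 130     # 128b/130b line encoding efficiency


def link_mb_s(lanes, gt_s=GEN3_GT_S, encoding=GEN3_ENCODING):
    """Nominal link bandwidth in MB/s (decimal), before packet/protocol overhead."""
    return lanes * gt_s * encoding / 8 * 1000   # Gbit/s -> GB/s -> MB/s


def iops_to_mb_s(iops, block_bytes=4096):
    """Bandwidth needed to sustain a given IOPS at a fixed transfer size."""
    return iops * block_bytes / 1e6


gen3_x4 = link_mb_s(4)                      # about 3938 MB/s
rated_read = iops_to_mb_s(1_400_000)        # 1.4M x 4 KiB, about 5734 MB/s
max_4k_iops = gen3_x4 * 1e6 / 4096          # 4 KiB IOPS that fit in the link

print(f"PCIe 3.0 x4 nominal:  {gen3_x4:.0f} MB/s")
print(f"1.4M IOPS at 4 KiB:   {rated_read:.0f} MB/s")
print(f"4 KiB IOPS ceiling:   {max_4k_iops:,.0f}")
```

On paper the full rated figure needs more than a gen 3 x4 link carries; whether that matters in practice depends on reaching the high queue depths the rating assumes, which typical desktop workloads rarely do.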


For your setup, IOPS and random read/write performance are bound by the 7104 and the T2 (if it is active and the volume is encrypted). PCIe generation and bus width are only relevant for sustained transfers, and your maximum in this configuration is about 4 GB/s (roughly 1 GB/s per lane).

I've seen RAID configs on the 7104 limited to 200K to 250K IOPS with 1M+ IOPS blades, so YMMV. Try testing with the T2 enabled and disabled, and measure your IOPS.

For optimal random read/write performance, convert the LBA size of your P41 from 512 bytes to 4K.

Thank you for this. I wouldn't have known that the 7104 can limit IOPS performance. I thought it did nothing more than divide the PCIe 3.0 x16 lanes equally into x4 lanes among its four ports.


Regarding the T2, would encryption slow IOPS performance? I have to double-check, but I believe I have the P41 set as non-encrypted.

So theoretically, a maximum of 1M+ IOPS wouldn't be hindered by the PCIe 3.0 x4 link, since random I/O isn't a sustained process that would ever saturate the bus at any given time? Just want to make sure I'm understanding it correctly.


From what I've seen, the 7104 uses the PEX 8747 chip, which may or may not be limited in terms of IOPS depending on several factors, e.g. RAID vs. no RAID, chip limitations, 512 vs. 4K LBA sector size on the blade, and whether 1 or 2/3/4 blades are in use. You may have a better chance of achieving the blade's advertised IOPS if at most one or two blades are used with the 7104 and no encryption is deployed.

As for T2 capabilities or limitations, no Apple-supplied storage or Mac has been advertised to handle 1M+ IOPS. I do know that AES-NI on Intel CPUs is limited in the IOPS it can process and is a choke point for random reads/writes, which is one of the reasons Apple introduced the T2.

For random tests, use sizes of 4K, 8K, 16K and so on; 1K and 32K+ are typically used by servers. For file sizes, start with 4K, which is the size blade manufacturers use in their testing (aligning the 4K sector size with the 4K test size).

Please share your findings; I'd be interested in seeing how the P41 performs. I'm considering the Solidigm P44 Pro and can't decide between the two.

Adding: these NVMe controllers don't just divide lanes; they act as switches, routing requests to and from the host and handling other tasks such as RAID. If the switch is under load, or the controller is busy with RAID or other tasks, expect a drop in IOPS and throughput.
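To put the queue-depth advice in numbers: in steady state, Little's law says achievable IOPS is roughly the number of outstanding I/Os divided by the average latency per I/O. A small Python sketch; the latencies are hypothetical round numbers, not P41 or 7104 measurements, and anything that adds latency per request (a busy switch, a RAID layer, encryption) lowers the result.

```python
def iops(queue_depth, latency_us):
    """Little's law: throughput = outstanding requests / time each one takes."""
    return queue_depth * 1e6 / latency_us


def mb_s(iops_value, block_bytes=4096):
    """Bandwidth in MB/s for a given IOPS at a fixed transfer size."""
    return iops_value * block_bytes / 1e6


# Hypothetical latencies: 80 us at QD1, 100 us at QD32 (deeper queues add some delay).
for qd, lat_us in [(1, 80), (32, 100)]:
    r = iops(qd, lat_us)
    print(f"QD{qd:<3} {lat_us:>4} us -> {r:>9,.0f} IOPS, {mb_s(r):7.1f} MB/s at 4 KiB")
```

This is why QD1 4K random results in benchmark tools sit far below a drive's rated IOPS: the rating is only reached with many requests in flight.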


On the question of whether 1M+ IOPS would be hindered by the PCIe 3.0 x4 link because random I/O never saturates the bus: correct.


Thank you. I'm currently running the P41 in one slot as the boot drive, with the other three slots acting as a JBOD of NVMe drives.

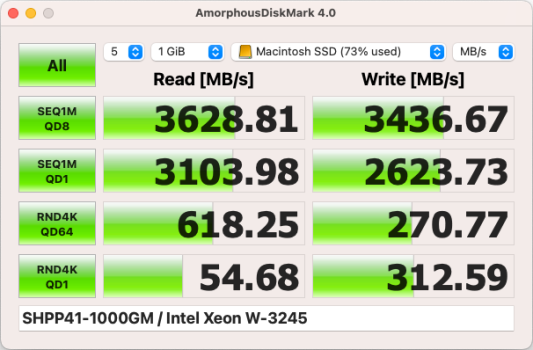

I just ran a quick AmorphousDiskMark test on the P41 for you, in case it helps your decision. This is with it in the 7104, just FYI.


Hello everybody, I really appreciate your great work and effort here.

Can somebody please tell me whether others have encountered what I'm experiencing lately?

My machine is a Mac Pro (Early 2009), now a 5,1, with 2x X5690, 64 GB RAM and a Radeon Vega 56 8 GB, running Monterey 12.6.6 through OCLP v0.6.1. I'm using an Ableconn PEXM2-130, whose ASM2824 switch chip gets extremely hot; although I adapted an old passive HP server CPU copper heatsink, it still heats up dramatically.

But the problem I actually need help with is different. After a fresh boot, the NVMe speeds measured with Blackmagic are about 2700 MB/s with my pair of drives (one 1 TB WD_Black SN850 and one 2 TB Samsung 970 EVO Plus), which is of course great. But after the system wakes from sleep, the Blackmagic and AmorphousDiskMark read/write speeds drop to about 375 MB/s, even though everything still feels fast and snappy and data transfers between the two blades are very fast. Is this a macOS or OCLP bug, a defect in the Ableconn card, or a problem with my particular setup?


It could be from the OCLP patches; maybe power management isn't working as it should. I have seen weird sleep/wake behaviour on my 4,1 (flashed to 5,1) with OCLP and Ventura, so I decided not to let the Mac sleep when the monitor sleeps.


I see no one has replied to your question, so I'll attempt it. I'm not familiar with the 7101A PCIe card; I just googled and looked for a bit. $400 is about what any PCIe card with space for four NVMe drives should cost (give or take). It doesn't look like you can put heatsinks on the drives because of the cover (which might itself be a heatsink, not sure). They say it supports RAID configurations; I don't know if you want that, though.

I have a Lycom DT120 ($25), which holds a single NVMe, and it hasn't failed me in over a year. They also make a Lycom DT130 that holds two NVMe drives ($130-$150); that's a decent jump in price, but it seems worth it, and I would have bought it had I known about it. You have four slots, so you should have two available alongside the GPU and USB card, and you can put these cards in any slot; it doesn't matter. As for heatsinks, they run about $10 to $15 each, and I would recommend them to keep the heat down a little.


I was thinking of adding a large single NVMe drive to my 7,1, which I understand is PCIe 3.0.

- Does the Crucial P3 work well in a 7,1?

- What brands have you used successfully?

- What about 4-8 TB SSDs?

You can connect gen 4 NVMe drives in a MacPro7,1 and use them at gen 4 speed if they are connected to a gen 4 PCIe switch such as the HighPoint 7505.


https://forums.macrumors.com/threads/pcie-ssds-nvme-ahci.2146725/post-29483973

For a single drive, an NVMe of any generation and any size should work. In the MacPro7,1, you'll get full performance from a single gen 3 or earlier NVMe without the need for an expensive PCIe switch. A gen 4 NVMe will only run at up to gen 3 speed in the MacPro7,1 without a PCIe switch.
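The rule above follows from how PCIe links train: each link runs at the highest generation both ends support and at the narrower of the two widths. A Python sketch with nominal per-lane rates (after line encoding, before packet overhead); the 7,1 slot is treated as gen 3 x16, per the thread.

```python
# Nominal per-lane rate in MB/s after line encoding (before packet overhead).
PER_LANE_MB_S = {
    1: 2500 * 8 / 10 / 8,       # 2.5 GT/s, 8b/10b   -> 250 MB/s
    2: 5000 * 8 / 10 / 8,       # 5 GT/s, 8b/10b     -> 500 MB/s
    3: 8000 * 128 / 130 / 8,    # 8 GT/s, 128b/130b  -> ~985 MB/s
    4: 16000 * 128 / 130 / 8,   # 16 GT/s, 128b/130b -> ~1969 MB/s
}


def negotiate(dev_gen, dev_lanes, port_gen, port_lanes):
    """Return (generation, lanes, nominal MB/s) for a link between two ports."""
    gen = min(dev_gen, port_gen)
    lanes = min(dev_lanes, port_lanes)
    return gen, lanes, PER_LANE_MB_S[gen] * lanes


# Gen 4 x4 NVMe directly in a gen 3 x16 slot: the link trains at gen 3 x4.
print(negotiate(4, 4, 3, 16))
# Same drive on a gen 4 switch port: gen 4 x4 between drive and switch.
print(negotiate(4, 4, 4, 4))
```

With a switch, the drive-to-switch links and the switch-to-slot uplink negotiate separately, so each drive can run at gen 4 while the card's gen 3 x16 uplink is shared among all of them.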

I have a Mac Pro 5,1 (Mid 2010). I am about to buy an NVMe drive and a compatible card for it. I have the latest firmware for my Mac and run both High Sierra and Ventura on two separate SSDs.

I wonder if these from the Amazon store will work? Or what is recommended to buy for a reasonable price?

Any help would be sincerely appreciated.

ADWITS PCI Express 3.0 x4/x8/x16 to M.2 NVMe and AHCI SSD adapter card

Samsung 970 EVO Plus 1 TB PCIe NVMe M.2 (2280)


If you want a single drive and cheap, use a Lycom DT120 with a 970 EVO; if you want two drives, use a Lycom DT130.

Thanks for the quick reply.

It seems it's not available on Swedish Amazon; I see the Lycom 129, 130, 131 and 131DA, but the dual 130 is too expensive for me. Would any of the others work, or are there other brands that work?

I've been very happy with the RIITOP NVMe M.2 card from Amazon. It has a beefy heatsink. It's a non-switched card, so you'll top out at ~1400 MB/s, but it should only cost about 15-20 euros. And yes, the 970 EVO Plus has been working for me.

That Lycom DT120 did not appear to have a heatsink; I'm not sure I would recommend one like that.


Unfortunately I don't know about those variants, sorry. Hopefully someone can give you proper guidance.

@Jill Valentine

Compared with the basic DT120 adapter, the DT130 includes an ASM2824 switch. So you get double the potential speed (3000 MB/s max vs. 1500 MB/s max) and the capacity to host two PCIe NVMe SSDs on one adapter.
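The 2:1 ratio is what the host link widths predict in a Mac Pro 5,1, whose slots run at PCIe 2.0 (5 GT/s). A Python sketch, assuming the DT120 connects to the host at x4 and the DT130's switch uplink at x8 (these widths are assumptions here; check the card specs). Both quoted maxima come out at about 75% of the nominal link rate, which is roughly in line with typical packet and protocol overhead.

```python
GEN2_LANE_MB_S = 5000 * 8 / 10 / 8   # 5 GT/s with 8b/10b encoding -> 500 MB/s per lane


def nominal_mb_s(host_lanes):
    """Nominal PCIe 2.0 host-link bandwidth in MB/s for a given lane count."""
    return host_lanes * GEN2_LANE_MB_S


# (card, assumed host-link lanes, max speed quoted in the post)
cards = [("DT120", 4, 1500), ("DT130", 8, 3000)]
for name, lanes, quoted in cards:
    nominal = nominal_mb_s(lanes)
    print(f"{name}: x{lanes} -> {nominal:.0f} MB/s nominal, "
          f"quoted {quoted} MB/s ({quoted / nominal:.0%} of nominal)")
```

The same gen 2 x4 ceiling is consistent with the ~1400-1450 MB/s reported elsewhere in this thread for non-switched single-drive cards in these machines.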

If you want *sustained* super-high-speed transfers, you need an adapter with a switch (PLX8747 or ASM2824), and you can only use Samsung 970 Pro SSDs (this was the last NVMe SSD manufactured using 2-bit MLC NAND). You can usually buy a 970 Pro on eBay.

Although it sounds like an unusual claim, there is NO OTHER COMBINATION of adapter and SSD that lets you get super-fast data transfers (6000 MB/s sustained on the Mac Pro 5,1) without bumping up against 'disk throttling' constraints.

All NVMe SSDs sold today use much cheaper and slower 3-bit TLC NAND. These disks 'go fast' for the first 20-30 seconds, then transfer speeds typically fall to 850-1100 MB/s.

Essentially, this means that if you don't want or need the ability to transfer content at 5000+ MB/s, the best adapter and SSD combination you can buy (IMHO) is the Sabrent 4-Drive NVMe Adapter Card plus up to four cheap TLC NAND NVMe SSDs.

The Sabrent 4-Drive NVMe Adapter Card is the lowest-cost adapter with a switch (ASM1812). You can buy one or two disks now and add more later. In slot 2 it's capable of reaching 3000 MB/s, and it has a nice big heatsink to eliminate thermal throttling issues.

LASTLY, if you RAID the "most affordable" (TLC) NVMe SSDs discussed on this forum, you can overcome the much lower read/write speeds that result once the "super speed cache" becomes saturated.

For example, TLC NVMe transfer speeds typically fall to 850-1100 MB/s after the first 20 seconds, so if you configure three SSDs in RAID 0, you can create a super-fast (2500+ MB/s) volume again, even after the 'super speed cache' is saturated on 3-bit TLC NAND NVMe SSDs.
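The RAID 0 arithmetic as a Python sketch: striping ideally multiplies the post-cache speed by the number of drives, but the total can't exceed what the adapter's host link carries. The 3000 MB/s cap is taken from the Sabrent slot 2 figure in the post; treat it, and the perfect scaling, as simplifying assumptions, since real arrays lose some efficiency to the RAID layer.

```python
def raid0_mb_s(n_drives, per_drive_mb_s, link_cap_mb_s):
    """Ideal RAID 0 sequential throughput, limited by the adapter's host link."""
    return min(n_drives * per_drive_mb_s, link_cap_mb_s)


LINK_CAP = 3000  # MB/s, assumed adapter ceiling (Sabrent card in slot 2 per the post)

# Post-cache TLC speeds quoted in the post: 850-1100 MB/s per drive.
for per_drive in (850, 1100):
    for n in (1, 2, 3):
        print(f"{n} x {per_drive} MB/s -> {raid0_mb_s(n, per_drive, LINK_CAP)} MB/s")
```

With three drives the array lands between about 2550 and 3000 MB/s in this model, consistent with the 2500+ figure; a fourth drive would add capacity but, under this cap, little extra speed.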


@zedex

cMP 4,1 flashed to 5,1, latest firmware, latest Mojave.

Currently a Crucial P1 1 TB on a SilverStone ECM20 NVMe card, 1400 MB/s in slot 3 (PCIe 2.0 x4).

Would the DT130's bifurcation improve speed in a PCIe x4 slot, with one or two blades?

What do you think of the WD AN1500 (hardware RAID)? In an article ("one last push Mac Pro 5.1"), a user seems OK with it.

Would it be capped at 1400 MB/s in a PCIe x4 slot anyway?

My RX 580 Nitro+ almost blocks slot 2 (x16), and I'm OK with this graphics card; I don't want to play with the Pixlas mod to get a Vega 56/64 that would fit and free the second x16 slot.

I am also looking for a PCIe riser card or cable to access the x16 slot, but it seems I have to find a way to maximise the x4 slot.


The 5 GT/s x4 slot can't do much better than the 1400 MB/s you already have.
