Linux, what do you mean, behind? Who was behind, and who came first to market with TB? Who started using USB Type-C on their machines? Who used FireWire first? Hi-res displays like Retina as standard?

Do I need to go on?

They're the ones who have no problem whatsoever trying new tech and discarding old legacy stuff, whether you like it or not.

The others follow suit. Sometimes they even rush their products out just to say they're first, but only after Apple's release schedule is already known, and only for the sake of beating Apple to it and claiming they did it first.

In the past no one really cared much about Apple, and PC manufacturers only cared about beating each other. Now Apple is in everyone's crosshairs.

They're a company to follow and even beat at all costs.

I didn't say TB2 was slow; I said Apple was.

Integrating an eGPU into the monitor doesn't really cut it for me. I wouldn't need it, and it's an added (unnecessary) cost. It adds to the power consumption and thermal load in an already tight case, it's another part that can break and probably wouldn't be easy to service, and it adds noise to a silent workstation, right in front of you...

Nah, that would be a bad idea.

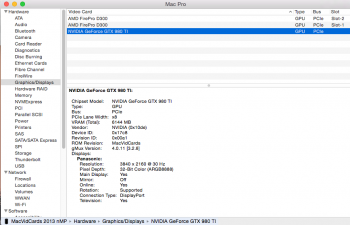

If you need one, use an external box. It's easier to upgrade, you can choose the GPU inside, and you can hide it from sight if you want.

But in fact (I think dec said it a while back) the TBD is really a docking station and not a standalone monitor. I'd rather have just a monitor, even without the extra ports; there are plenty of those on the back of the nMP.

MVC, did you even try Google Translate, or was it, as usual, something that came out of your big mouth?! Do you put a sticker on the cards you sell saying "it works as eGPU"?